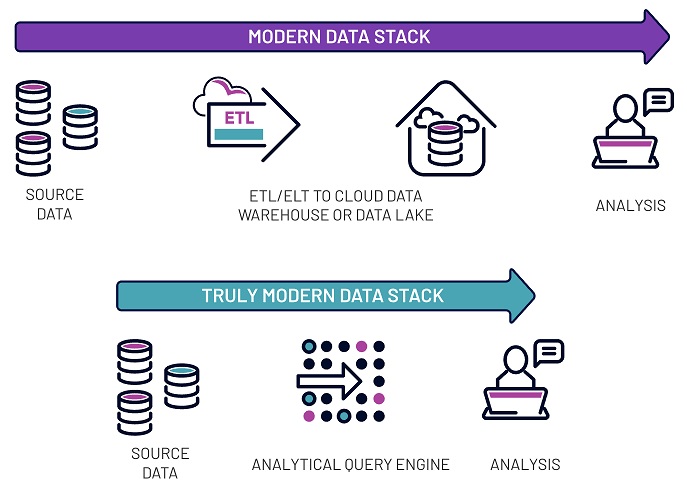

Over the past few years, the "modern data stack" has entered the vernacular of the data world, describing a standardized, cloud-based data and analytics environment built around some classic technologies. In its simplest form, this looks like:

1. A data pipeline (ETL or ELT) moving data from its source into an analytics-focused environment

2. A target data warehouse or data lake

3. An analytics tool for creating business value out of the data

This technology stack is based on the fundamental idea that data must be moved out of its source and into a centralized location in order to gain value from it. One thing to note, however, is that what we call the "modern data stack" is essentially a re-envisioned cloud-and-SaaS version of the "legacy data stack" with better analytics tools. What started out as a stack with a database + enterprise ETL tool + analytics-focused storage + reporting system became a modern version of the same functional process.

Despite new cloud-based and SaaS tools, the paradigm remains the same
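The three-step flow described above can be sketched in a few lines. This is only a minimal illustration, assuming two in-memory SQLite databases as stand-ins for the production system and the warehouse; the table names (`orders`, `fact_orders`) and the function names are hypothetical and not tied to any particular product.

```python
import sqlite3


def build_source() -> sqlite3.Connection:
    """Stand-in for a production transactional database (hypothetical schema)."""
    src = sqlite3.connect(":memory:")
    src.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    src.executemany(
        "INSERT INTO orders (customer, amount_cents) VALUES (?, ?)",
        [("acme", 1200), ("acme", 800), ("globex", 500)],
    )
    src.commit()
    return src


def run_pipeline(src: sqlite3.Connection, warehouse: sqlite3.Connection) -> int:
    """Step 1: move data from the source into the analytics environment (full-refresh batch)."""
    rows = src.execute("SELECT id, customer, amount_cents FROM orders").fetchall()
    # Step 2: the target warehouse table holds a copy of the source rows.
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS fact_orders "
        "(id INTEGER PRIMARY KEY, customer TEXT, amount_cents INTEGER)"
    )
    warehouse.execute("DELETE FROM fact_orders")
    warehouse.executemany("INSERT INTO fact_orders VALUES (?, ?, ?)", rows)
    warehouse.commit()
    return len(rows)


def revenue_by_customer(warehouse: sqlite3.Connection) -> dict:
    """Step 3: the analytics query runs against the warehouse copy, not the source."""
    cursor = warehouse.execute(
        "SELECT customer, SUM(amount_cents) FROM fact_orders "
        "GROUP BY customer ORDER BY customer"
    )
    return dict(cursor)


source = build_source()
warehouse = sqlite3.connect(":memory:")
loaded = run_pipeline(source, warehouse)
report = revenue_by_customer(warehouse)
print(loaded, report)
```

Note that the report only reflects whatever the last pipeline run copied; rows written to the source afterwards stay invisible to analytics until the pipeline runs again.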

A Flawed Paradigm

The "modern data stack" is a reimagining of the legacy data flow with better tools. The original stack was largely driven by hardware limitations: production transactional systems simply weren't designed to support an analytics workload. By moving the data from the production system into a replicated analytics-focused environment, you can tailor your data for reporting, visualizations, modeling, etc. However, there are still some pretty serious flaws in both versions:

Moving data away from the source introduces inherent latency, along with complex and fragile data pipelines. Getting back to "real-time analytics" can be incredibly challenging and involve large data engineering efforts.

While modern cloud-based data warehouses and data lakes allow for the separation of storage and compute resources and both horizontal and vertical scaling, the true separation of these resources (meaning private storage and shared compute) remains a challenge.

Complex enterprise environments with many operational systems struggle with the idea of bringing all data together in a cloud data warehouse with a common data model; in practice, this rarely works.

The recent focus on tools, rather than functionality, ultimately leads to vendor lock-in and blocks optionality. The bottom line is that there is still a large disconnect between the source data and the final business value.

In thinking about these flaws inherent in the modern data stack, we've started to instead wonder: What is a truly modern data stack?

Imagine you're dropped into a company and asked to build a system to easily access data for the purpose of deriving business value. Your employees are data literate - they understand SQL and want to use that and maybe a visualization tool like Tableau to answer business questions using data. You've got today's modern infrastructure, your production transactional databases are all in the cloud, and you want to separate storage and compute. You're not hardware-bound at all. What would you build?

Introducing the Four S's

Let's focus on what the business user wants:

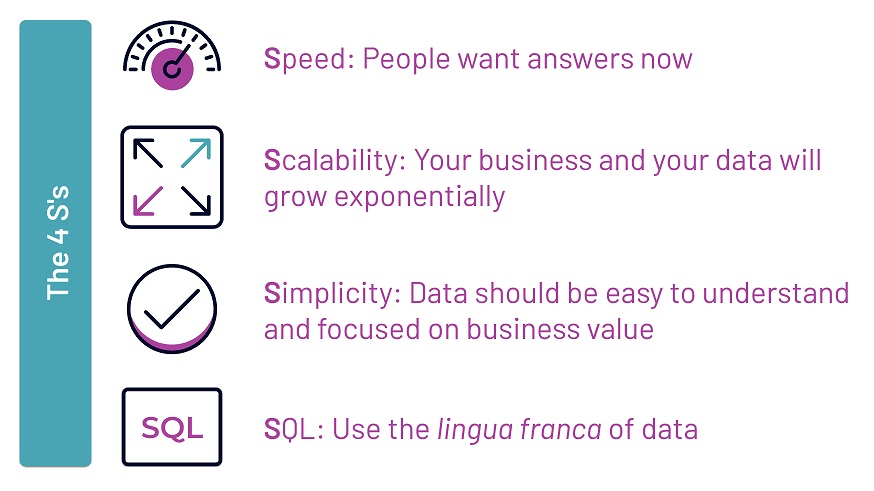

The 4 Ss of data: speed, scalability, simplicity, and SQL

In the end, these are the goals, and you want to focus your architecture and data ecosystem on these principles. If you can achieve each of these "four S's" with your new data system, you'll be a hero!

Building something truly modern

To build a system that meets your goals while focusing on simplicity, start with a product that allows you to run SQL directly on your source data, wherever it lives, without the need for a large effort around data infrastructure. Then add in the analytics layer with a visualization tool, machine learning, etc. This actually isn't drastically different from the modern data stack, but the distance between the data and the value is shortened; in a complex and scaling organization, this efficiency gain can be significant.

The truly modern data stack focuses on the four Ss, reduces latency and complexity, and is vendor-agnostic, ultimately shortening the path between the data and the business value derived from it

This simpler stack reduces batch processing, and the ability to use live or cached source data reduces latency. Governance, including data lineage, also becomes more transparent with fewer intermediary tools and datastores. Fewer tools and lower storage requirements, not to mention the true separation of storage and compute resources, also lend themselves to a streamlined and more cost-effective data ecosystem.
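As a rough illustration of running SQL directly on source data, the sketch below uses SQLite's `ATTACH DATABASE` so that one connection can join tables living in two separate database files. The two files stand in for independent operational systems; the names `crm`, `billing`, `customers`, and `invoices` are hypothetical, and a real deployment would use a distributed query engine rather than SQLite.

```python
import os
import shutil
import sqlite3
import tempfile


def make_source(path, ddl, insert_sql, rows):
    """Create one stand-in operational database on disk."""
    conn = sqlite3.connect(path)
    conn.execute(ddl)
    conn.executemany(insert_sql, rows)
    conn.commit()
    return conn


workdir = tempfile.mkdtemp()
crm_path = os.path.join(workdir, "crm.db")
billing_path = os.path.join(workdir, "billing.db")

crm = make_source(
    crm_path,
    "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)",
    "INSERT INTO customers VALUES (?, ?)",
    [(1, "acme"), (2, "globex")],
)
billing = make_source(
    billing_path,
    "CREATE TABLE invoices (customer_id INTEGER, amount_cents INTEGER)",
    "INSERT INTO invoices VALUES (?, ?)",
    [(1, 1200), (1, 800), (2, 500)],
)

# The "query engine" stores no data of its own; it only references the sources.
engine = sqlite3.connect(":memory:")
engine.execute("ATTACH DATABASE ? AS crm", (crm_path,))
engine.execute("ATTACH DATABASE ? AS billing", (billing_path,))

REVENUE_SQL = """
SELECT c.name, SUM(i.amount_cents)
FROM crm.customers AS c
JOIN billing.invoices AS i ON i.customer_id = c.id
GROUP BY c.name
ORDER BY c.name
"""


def revenue() -> dict:
    """Join across both sources in place, with no pipeline and no copy."""
    return dict(engine.execute(REVENUE_SQL).fetchall())


before = revenue()

# A new transaction lands in the operational billing system...
billing.execute("INSERT INTO invoices VALUES (?, ?)", (2, 300))
billing.commit()

# ...and the very next query sees it, because nothing had to be reloaded.
after = revenue()
print(before, after)

# Clean up the temporary stand-in databases.
for conn in (engine, crm, billing):
    conn.close()
shutil.rmtree(workdir, ignore_errors=True)
```

Because the query reads the sources in place, there is no batch window between a write and its appearance in the analysis, which is the latency reduction this section describes.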

Enter Data Mesh

As complexity and the need for data maturity grow at an organization, an enterprise will often have many different domains with their own unique data ecosystems and analyses. However, when data is a primary factor in business strategy, it's the analysis of data across the organization that brings the greatest power. At this point, companies need to think about a global data strategy, and proactively treat data as a first-class business product, rather than a happy afterthought. The business goal is to embrace agility in data in the face of complexity, and accelerate the time-to-value for data.

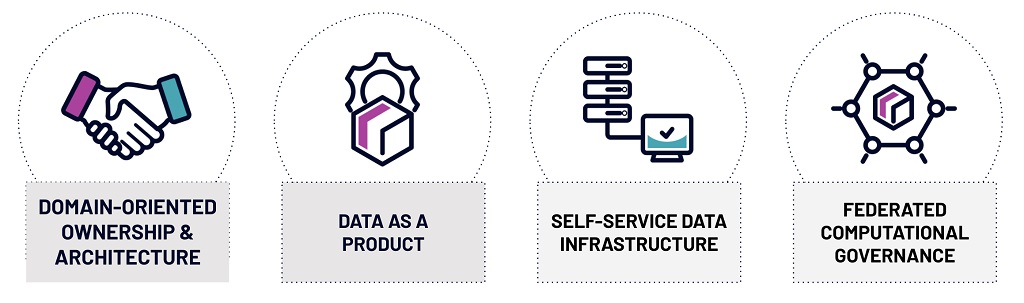

Organizationally and architecturally, Data Mesh marries the ideas of a truly modern data stack with the concept of data as a top-tier product. Data producers treat data consumers as first-class stakeholders of their work, and the consolidation of the technologies for data consumption brings about a revolutionary simplicity of the data and analytics model at scale. With its guiding tenets, Data Mesh is firmly cementing its place as the future of the business data ecosystem:

Core principles of Data Mesh

While Data Mesh defines a global socio-technical architecture for an enterprise's overall data strategy, there is a place for the "truly modern data stack" within this architecture, as each domain will be required to pull data from the operational plane, transform it for analytics, and provide access in the analytical plane. The transitioning and transformation of that data is itself a data stack, driving the concept of data as a first-class product of a domain at the same level of importance as code. This is a key driver of Data Mesh and an incredibly important concept for the business as it cements the idea of data as a primary concern for both the business and the domain. The "truly modern data stack" can be considered a key piece of the data infrastructure for domains to provide data products in the analytical plane; global data governance marries together these domains' stacks through access control.

What's Next?

The so-called "modern data stack" has its roots in outdated architectures built for antiquated hardware, and stands to be reimagined. The combination of the "four S's" and the four driving tenets of the Data Mesh provides a framework for simplicity and resiliency within a data ecosystem, as well as providing optionality across domains. As many organizations mature their data and analytics strategy, considering all of the data stacks within the company as a whole is an important step.

The goal is to architect a solution that can be used both within the domains as a data product creation technology, and across the domains as an analytical query engine, to create a data ecosystem that combines the "truly modern data stack" with the Data Mesh. A solution that provides a self-service data infrastructure, along the lines of the one defined as a pillar of Data Mesh, can be used cross-functionally in an organization. With a flexible data environment to create data products, query across data products, and even create additional data products from existing data products, mirroring a more complex ecosystem becomes straightforward.
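To make the idea of domain-owned data products a little more concrete, here is a toy sketch, assuming each product is published as a SQL view and governance is reduced to a per-product set of consumer domains. The `DataProduct` and `Mesh` classes, the domain names, and the tables are all hypothetical; Data Mesh itself does not prescribe this or any other implementation.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    name: str    # view name exposed in the analytical plane
    domain: str  # owning domain
    sql: str     # SELECT statement that defines the product
    consumers: set = field(default_factory=set)  # domains granted read access


class Mesh:
    """Toy analytical plane: products are views, governance is a grant check."""

    def __init__(self) -> None:
        self.db = sqlite3.connect(":memory:")
        self.products = {}

    def publish(self, product: DataProduct) -> None:
        # Names and SQL come from trusted code here, never from user input.
        self.db.execute(f"CREATE VIEW {product.name} AS {product.sql}")
        self.products[product.name] = product

    def query(self, consumer: str, product_name: str) -> list:
        product = self.products[product_name]
        if consumer != product.domain and consumer not in product.consumers:
            raise PermissionError(f"{consumer} may not read {product_name}")
        return self.db.execute(f"SELECT * FROM {product_name} ORDER BY 1").fetchall()


mesh = Mesh()

# Operational-plane tables, one per domain (stand-ins for real source systems).
mesh.db.executescript("""
CREATE TABLE sales_orders (customer TEXT, amount_cents INTEGER);
INSERT INTO sales_orders VALUES ('acme', 1200), ('acme', 800), ('globex', 500);
CREATE TABLE support_tickets (customer TEXT, status TEXT);
INSERT INTO support_tickets VALUES ('acme', 'open'), ('globex', 'open'), ('globex', 'closed');
""")

# Each domain publishes its own product from its own operational data.
mesh.publish(DataProduct(
    "revenue_by_customer", "sales",
    "SELECT customer, SUM(amount_cents) AS revenue_cents "
    "FROM sales_orders GROUP BY customer",
    consumers={"analytics"},
))
mesh.publish(DataProduct(
    "open_tickets_by_customer", "support",
    "SELECT customer, COUNT(*) AS open_tickets "
    "FROM support_tickets WHERE status = 'open' GROUP BY customer",
    consumers={"analytics"},
))

# A new product built from existing products, queried across domains.
mesh.publish(DataProduct(
    "customer_health", "analytics",
    "SELECT r.customer, r.revenue_cents, t.open_tickets "
    "FROM revenue_by_customer AS r "
    "JOIN open_tickets_by_customer AS t ON t.customer = r.customer",
    consumers={"sales"},
))

health = mesh.query("sales", "customer_health")
print(health)
```

In this sketch, a call such as `mesh.query("support", "revenue_by_customer")` raises `PermissionError`, since the support domain was never granted access to that product.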

A Data Mesh incorporating the "truly modern data stack" can raise the bar, streamlining the path between data producers and business value. Providing direct SQL access to a wide array of data sources is key to unlocking the power of data to drive business strategy.
