Testing applications and services in isolation only gets you so far; eventually you need to test in an operational environment. An "environment-based" approach to testing enables teams to test their applications in the context of all the dependencies that exist in the real-world environment.

Testers and developers often look at their deployment environment one component at a time, testing each piece in isolation. This works to a certain extent but doesn't exercise enough of the system. The opposite extreme is testing only at the UI level, where the root causes of failures are hard to diagnose. The middle ground is to test applications in as realistic an environment as possible, using virtual services to mimic dependencies that aren't available or consistent enough for testing. As more dependencies come online, you can move from virtualized services to live versions.
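A minimal Python sketch of that middle ground, assuming a hypothetical balance-lookup dependency (none of these names come from a real tool): the application logic receives its dependency as a parameter, so a virtual service with canned data can stand in now and a live client with the same signature can replace it later.

```python
from typing import Callable, Dict

# Hypothetical dependency interface: takes an account id, returns a balance record.
BalanceLookup = Callable[[str], Dict[str, object]]


def virtual_balance_service(account_id: str) -> Dict[str, object]:
    """Virtual service: returns canned, repeatable data instead of calling a real system."""
    canned = {"A-100": 250.0, "A-200": 0.0}
    return {
        "account": account_id,
        "balance": canned.get(account_id, 0.0),
        "source": "virtual",
    }


def can_withdraw(lookup: BalanceLookup, account_id: str, amount: float) -> bool:
    """Application logic under test; it only sees whichever lookup it is handed."""
    record = lookup(account_id)
    return float(record["balance"]) >= amount


print(can_withdraw(virtual_balance_service, "A-100", 100.0))  # True
print(can_withdraw(virtual_balance_service, "A-200", 1.0))    # False
```

Once the real dependency is stable, a live lookup function with the same signature could be passed in place of `virtual_balance_service` without touching the tests.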

At Parasoft, we call this an "environment-based" approach to testing: one that enables the whole team to test their application with the full context of the real-world environment. Tests are deployed into a live, partially virtualized, or fully virtualized environment as needed. The environment-based approach to testing provides a stabilized platform for test execution.

Environment-Based Testing

The environment-based approach to testing provides more context during UI testing, making it easier to understand how dependencies affect the application. It also helps the team go a level deeper by creating API and database tests for the dependent components. By doing so, you can achieve more complete test coverage for your application and decouple testing from the UI, so that UI tests and lower-level tests can be run together or independently. Test failures are highlighted within the environment, which makes pinpointing them easier.

Some additional benefits that come with an environment-based approach to testing include:

■ Shifting testing left by testing the application in a production-like environment as soon as possible. This is made possible by service virtualization: you create virtual services, then switch to live services as they become both available and stable.

■ Reducing the time and effort of diagnosing test failures by pinpointing exactly where and why tests have failed.

■ Easily extending testing from UI testing to API testing, which can be configured in the context of the environment and run as part of a CI/CD pipeline.

■ Increasing test coverage by creating scenarios that couldn't be implemented through UI testing alone.

These benefits reduce the overall burden on testers to set up, run, and diagnose application tests, while increasing test coverage and allowing testing to be done sooner and more comprehensively.

Environment-Based Testing in Action

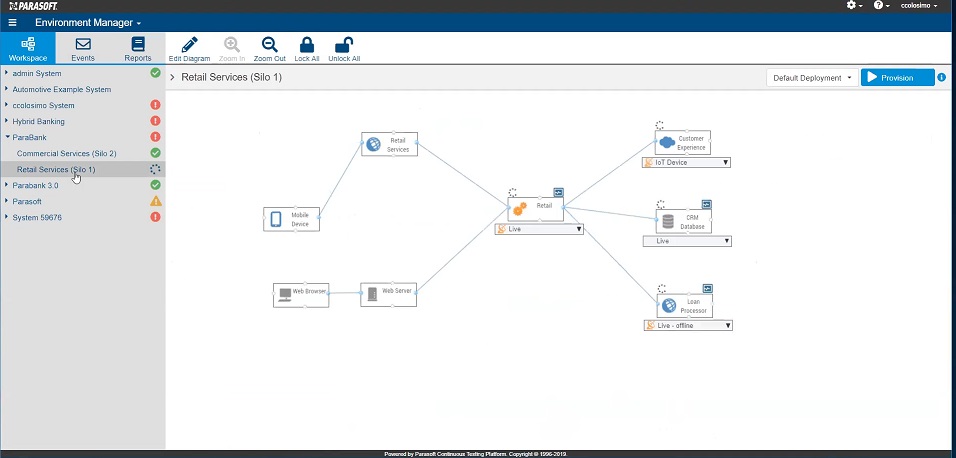

So let's look at this in action. In this instance, an API testing tool supplies an environment manager that visually displays the execution environment for the application under test. Shown below is an example of an application in its environment, connected to its dependencies. Each component in the environment can be provisioned as live or virtualized as needed, depending on the stability and availability of that component:

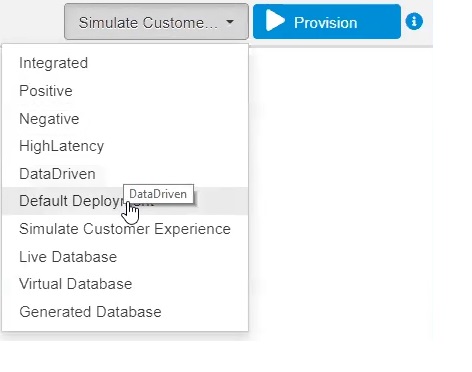

Deployment presets configure the environment based on current test requirements. Different scenario categories may require different presets. These presets contain all the needed settings for the environment manager:
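To make the idea of a preset concrete, here is a small Python sketch. It is not the environment manager's actual format; the `Preset` class, the `Mode` enum, and the component names are all hypothetical. It only shows a preset as a named mapping from component to provisioning mode, plus a helper that switches one component to live.

```python
from dataclasses import dataclass, field, replace
from enum import Enum
from typing import Dict, List


class Mode(Enum):
    LIVE = "live"
    VIRTUAL = "virtual"


@dataclass(frozen=True)
class Preset:
    """Hypothetical deployment preset: which components run live vs. virtualized."""
    name: str
    components: Dict[str, Mode] = field(default_factory=dict)

    def virtualized(self) -> List[str]:
        """Names of the components this preset provisions as virtual services."""
        return sorted(c for c, m in self.components.items() if m is Mode.VIRTUAL)


def promote(preset: Preset, component: str) -> Preset:
    """Return a copy of the preset with one component switched to live."""
    if component not in preset.components:
        raise KeyError(component)
    updated = dict(preset.components)
    updated[component] = Mode.LIVE
    return replace(preset, components=updated)


# Illustrative component names only.
EARLY = Preset(
    "early-integration",
    {
        "auth-service": Mode.LIVE,
        "payments-api": Mode.VIRTUAL,
        "inventory-db": Mode.VIRTUAL,
    },
)

print(EARLY.virtualized())                           # ['inventory-db', 'payments-api']
print(promote(EARLY, "payments-api").virtualized())  # ['inventory-db']
```

Keeping presets immutable and deriving new ones with `promote` means an earlier configuration stays available for scenarios that still need the virtualized dependency.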

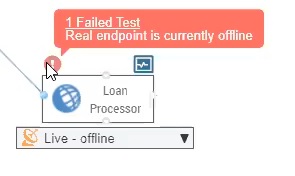

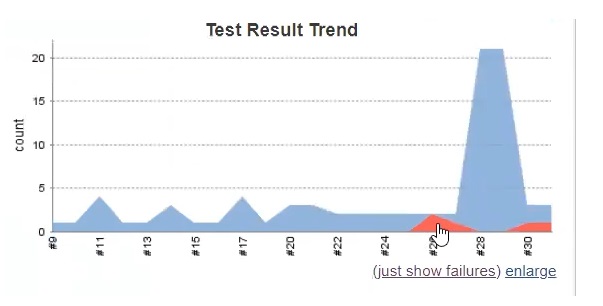

Once an environment is provisioned, a set of API and UI interactions is executed against the application under test. Results are captured, recorded, and compared to expected results within the IDE and in a web portal, where the environment manager utility acts as the hub for functional testing. Failures are highlighted in the environment manager, and from there you can follow an error into a more detailed report.

The API test reports that are generated indicate both failures and test coverage information. A failure means either that new functionality is broken or that there's an issue with a test. The root cause can be traced from the failure in the report to the appropriate API. Test coverage information is important because it reveals missing tests, and therefore code that could ship untested.
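The two kinds of information in such a report can be sketched in a few lines of Python. This is an illustration under assumed names (`CheckResult`, the component names, and the status codes are invented for the example), not the report format of any particular tool: failures are grouped by the component they hit, and components with no tests at all are listed as coverage gaps.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, Iterable, List, Set


@dataclass
class CheckResult:
    """One executed test: what was expected and what came back."""
    test: str
    component: str
    expected: object
    actual: object

    @property
    def passed(self) -> bool:
        return self.expected == self.actual


def failures_by_component(results: Iterable[CheckResult]) -> Dict[str, List[str]]:
    """Group failing test names under the component they exercised."""
    grouped: Dict[str, List[str]] = defaultdict(list)
    for r in results:
        if not r.passed:
            grouped[r.component].append(r.test)
    return dict(grouped)


def untested(known: Set[str], results: Iterable[CheckResult]) -> List[str]:
    """Components in the environment that no test touched."""
    return sorted(known - {r.component for r in results})


results = [
    CheckResult("login returns token", "auth-service", 200, 200),
    CheckResult("charge card", "payments-api", 201, 500),
    CheckResult("refund card", "payments-api", 200, 404),
]
environment = {"auth-service", "payments-api", "inventory-db"}

print(failures_by_component(results))  # {'payments-api': ['charge card', 'refund card']}
print(untested(environment, results))  # ['inventory-db']
```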

The Role of Service Virtualization

Errors also arise from missing dependencies. This is where service virtualization comes into play. It's often not possible to have live dependencies available for testing, perhaps because they aren't ready yet or because they are difficult and time-consuming to duplicate from the production environment. But to assure high-quality applications within schedule and budget constraints, it's essential to have unrestrained access to a trustworthy and realistic test environment, which includes the application under test and all of its dependent components (e.g., APIs, third-party services, databases, applications, and other endpoints).

Service virtualization gives software teams access to a complete test environment, including all critical dependent system components, and lets them alter the behavior of those components in ways that would be impossible with a staged test environment. The result is testing that starts earlier, runs faster, and covers more. It also allows you to isolate different layers of the application for debugging and performance testing, but we're not going to get into that as much today.

Individual services can be configured as live or virtualized. Deployment configurations that are combinations of live and virtualized dependencies can be saved as presets.
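Altering a dependency's behavior is the part that is hardest to reproduce with live systems, so a short sketch may help. The Python below assumes a hypothetical `VirtualService` class and a made-up pricing dependency; it shows a virtual service that can be told to fail on demand, which lets a test exercise the application's error handling without taking a real service down.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class VirtualService:
    """Hypothetical virtual stand-in for an HTTP-like dependency."""
    canned_body: Dict[str, object]
    fail_with_status: Optional[int] = None  # e.g. 503 to simulate an outage

    def call(self) -> Dict[str, object]:
        if self.fail_with_status is not None:
            return {"status": self.fail_with_status, "body": {}}
        return {"status": 200, "body": self.canned_body}


def fetch_price(service: VirtualService, default: float = 0.0) -> float:
    """Application logic under test: falls back to a default when the dependency fails."""
    response = service.call()
    if response["status"] != 200:
        return default
    return float(response["body"]["price"])


healthy = VirtualService({"price": 19.99})
outage = VirtualService({"price": 19.99}, fail_with_status=503)

print(fetch_price(healthy))               # 19.99
print(fetch_price(outage, default=-1.0))  # -1.0
```

The same pattern extends to other behaviors a staged environment rarely offers on request, such as malformed payloads or slow responses.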

Integration with CI/CD Pipelines

Manual testing examples are interesting, but the real work happens in a continuous integration pipeline. Dynamic test environments can be deployed from Jenkins as part of a continuous integration/deployment pipeline. The following example shows a "Deploy Dynamic Environment" step configured as a Jenkins build.

With a Jenkins plugin, you add build steps that deploy test suites from the build. Results are captured and linked to the environment manager.

After the build completes, test results are displayed within Jenkins. Failures link directly back to the environment manager and the deployment scenario.

Summary

By considering the operational environment first, and leveraging an environment-based approach to testing, software testers can make sure that everything is in place to make testing productive and efficient. Rather than spending time trying to test applications in isolation or with a pseudo-realistic environment, testers can spend more time on the actual testing itself.
